Text-To-Speech

Our Text-To-Speech component processes text and plays audio with matching facial animation.

Approach

The realtime rig is set up by the loader class, which imports all FACS and blendshapes from the didimo package. We use a third-party service (Amazon Polly) to generate the audio and the corresponding phoneme timing data, which the viseme player uses to select the matching viseme while the animation plays. With SSML you can control various aspects of speech, such as pronunciation, volume, pitch, and speech rate.

For more information, see Generating Speech from SSML Documents.
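As an illustration of the SSML controls mentioned above, the sketch below builds a small SSML document that sets speech rate, volume, and pitch through the `prosody` tag. The `SsmlBuilder` class and its `Wrap` method are hypothetical helpers, not part of the Didimo SDK, and the attribute values shown are only examples of values that Amazon Polly documents for `prosody`.

```csharp
using System.Xml.Linq;

// Hypothetical helper for illustration only; not part of the Didimo SDK.
public static class SsmlBuilder
{
    // Wraps plain text in <speak><prosody ...> so that the speech service
    // applies the requested rate, volume, and pitch.
    // XElement takes care of escaping characters such as '&' and '<'.
    public static string Wrap(string text, string rate, string volume, string pitch)
    {
        var speak = new XElement("speak",
            new XElement("prosody",
                new XAttribute("rate", rate),
                new XAttribute("volume", volume),
                new XAttribute("pitch", pitch),
                text));

        // DisableFormatting keeps the document on one line with no added whitespace.
        return speak.ToString(SaveOptions.DisableFormatting);
    }
}
```

For example, `SsmlBuilder.Wrap("Hello & welcome", "slow", "loud", "high")` produces a single `speak` element whose text has the ampersand escaped, which can then be sent in place of plain text.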

Visemes

We use Amazon's visemes, which are based on a mesh called Rin.

Rin has 1 silence pose, 16 viseme poses (plus one more that is not used), a smile, and a frown.

| Frame | Viseme |
|---|---|
| 0 | silence |
| 8 | p |
| 16 | t |
| 24 | SS |
| 32 | TT |
| 40 | f |
| 48 | k |
| 56 | i |
| 64 | r |
| 72 | s |
| 80 | u |
| 88 | amper |
| 96 | a |
| 104 | e |
| 112 | EE |
| 120 | o |
| 128 | OO |

NOTE: The frame positions in the table were used by the deprecated animation system. Currently, the realtime rig handles the visemes directly.
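The timing data behind these visemes comes from Amazon Polly as speech marks: one JSON object per line, with a `time` in milliseconds, a `type`, and a `value`. The sketch below shows one way to turn that stream into a list of timed visemes. `VisemeMark` and `SpeechMarkParser` are hypothetical names, and the Didimo API may deliver this data in a different wrapper. The example uses `System.Text.Json`, which is an assumption about the runtime; inside Unity you would likely swap in `JsonUtility` or another JSON library.

```csharp
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical container for one viseme event; not an SDK type.
public readonly struct VisemeMark
{
    public VisemeMark(float timeSeconds, string viseme)
    {
        TimeSeconds = timeSeconds;
        Viseme = viseme;
    }

    public float TimeSeconds { get; }
    public string Viseme { get; }
}

// Hypothetical parser for line-delimited speech marks.
public static class SpeechMarkParser
{
    public static List<VisemeMark> ParseVisemes(string speechMarks)
    {
        var marks = new List<VisemeMark>();
        foreach (string rawLine in speechMarks.Split('\n'))
        {
            string line = rawLine.Trim();
            if (line.Length == 0) continue; // skip blank lines

            using JsonDocument doc = JsonDocument.Parse(line);
            JsonElement root = doc.RootElement;

            // Keep only viseme marks; word and sentence marks are ignored here.
            if (root.GetProperty("type").GetString() != "viseme") continue;

            // Speech mark times are in milliseconds; audio playback time is in seconds.
            float seconds = root.GetProperty("time").GetInt32() / 1000f;
            marks.Add(new VisemeMark(seconds, root.GetProperty("value").GetString()));
        }
        return marks;
    }
}
```

Converting to seconds up front keeps the marks directly comparable with the audio playback position used by the viseme player.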

Phonemes

The facial rig maps each viseme to a phoneme as follows:

| Viseme | Phoneme |
|---|---|
| p | phoneme_p_b_m |
| t | phoneme_d_t_n |
| SS | phoneme_s_z |
| TT | phoneme_d_t_n |
| f | phoneme_f_v |
| k | phoneme_k_g_ng |
| i | phoneme_ay |
| r | phoneme_r |
| s | phoneme_s_z |
| u | phoneme_ey_eh_uh |
| amper | phoneme_p_b_m |
| a | phoneme_aa |
| e | phoneme_ae_ax_ah |
| EE | phoneme_ey_eh_uh |
| o | phoneme_ao |
| OO | phoneme_aw |

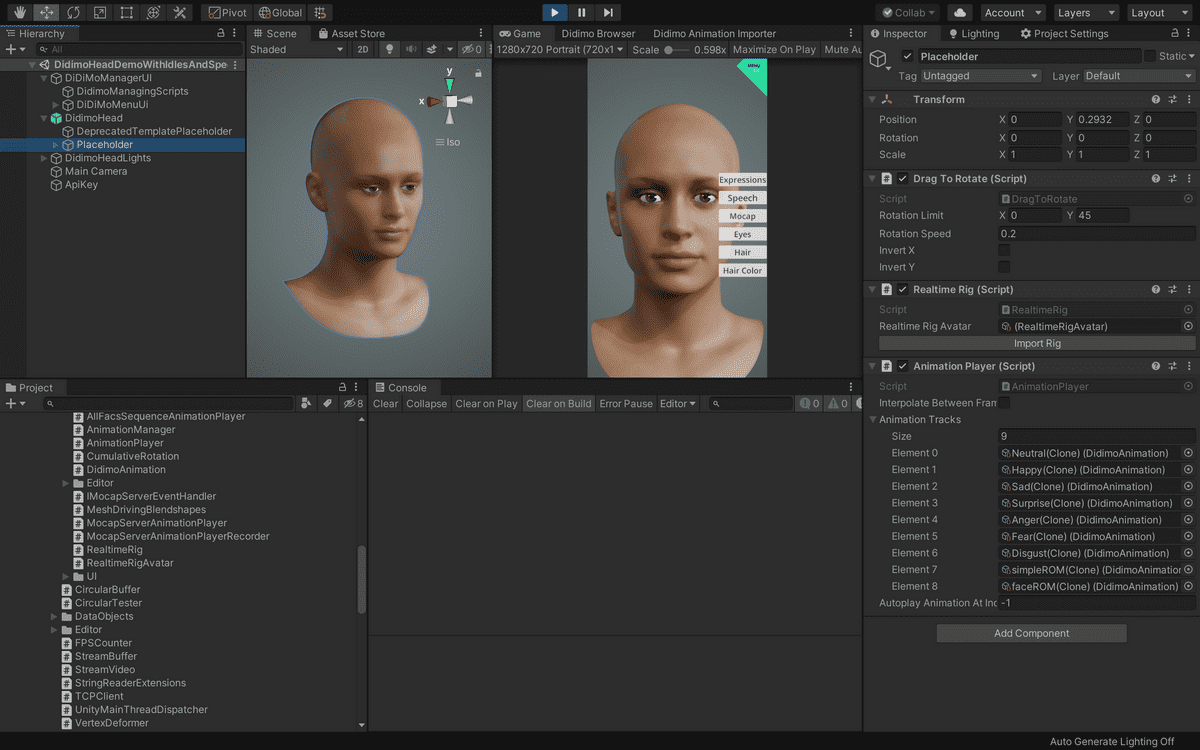

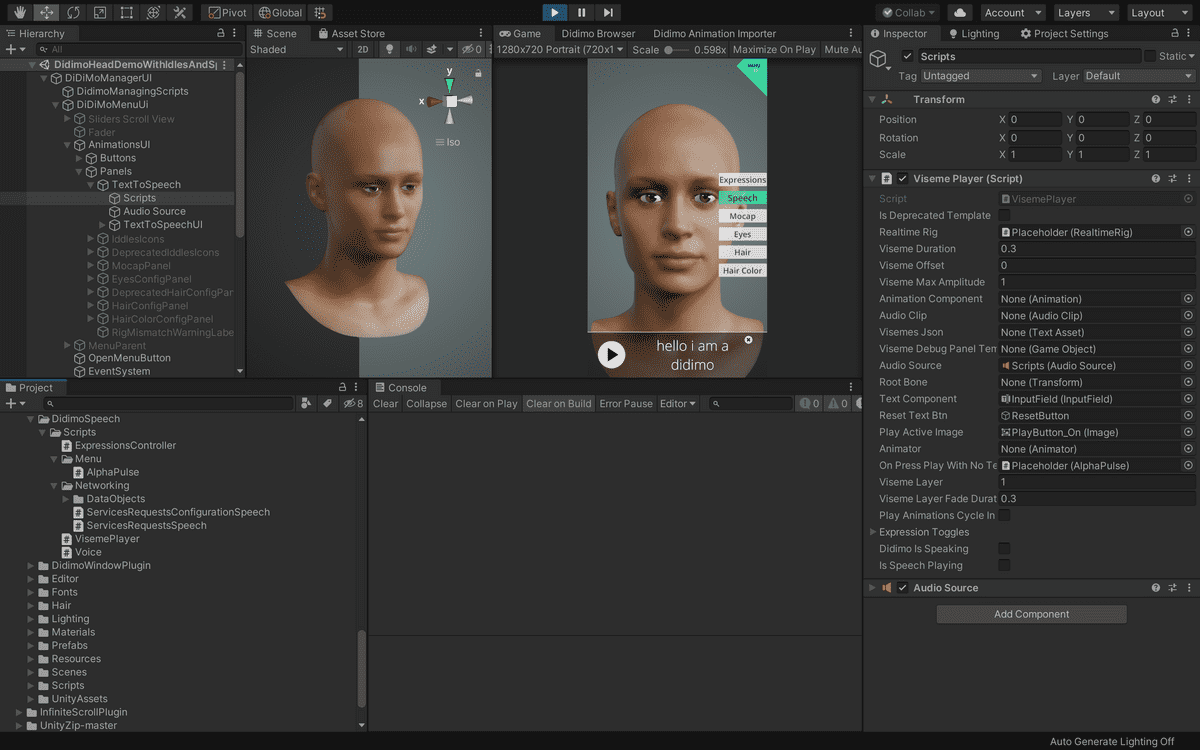

Setup Of The Viseme Player

The instantiation tool will handle the setup of the realtime rig and the realtime rig avatar, which the viseme player uses to play text-to-speech animations.

Currently, the realtime rig holds 23 blendshapes to match the phonemes referenced in the section above.

The viseme player will then be able to control the active viseme through the rig.

```csharp
// Viseme Player Class
private void Update()
{
    // Reset the pose on update. If we have other animations playing (e.g. idle animations), this won't override them
    if (audioSource != null && audioSource.isPlaying && visemes != null && isDeprecatedTemplate)
    {
        ResetPose();
    }

    // Realtime rig path: drive the visemes from the current audio playback time.
    // The null check guards against a missing audio source while didimoIsSpeaking is set.
    if (didimoIsSpeaking && audioSource != null && audioSource.isPlaying && !isDeprecatedTemplate)
    {
        UpdatePoseForTimeImpl(audioSource.time);
    }
}

void UpdatePoseForTimeImpl(float time)
{
    // Collect the visemes that are active at this time, each with its blend weight
    List<InterpolationViseme> visemesToInterpolate = GetVisemesForInterpolation(time + visemeOffset);

    // Clear the previous frame's weights before applying the new ones
    realtimeRig.ResetAll();

    foreach (InterpolationViseme interpolationViseme in visemesToInterpolate)
    {
        PlayMatchingRealtimeRigVisemeFromPhoneme(interpolationViseme.animation, interpolationViseme.weight);
    }
}

public void PlayMatchingRealtimeRigVisemeFromPhoneme(string phoneme, float weight)
{
    string viseme_name;

    // Map between the name of the phoneme and the name of the viseme in the FACS we support in the realtime rig
    // (switch-case tables are compiled to constant hash jump tables)
    switch (phoneme)
    {
        case "sil": viseme_name = ""; break;
        case "p": viseme_name = "phoneme_p_b_m"; break;
        case "t": viseme_name = "phoneme_d_t_n"; break;
        case "SS": viseme_name = "phoneme_s_z"; break;
        case "TT": viseme_name = "phoneme_d_t_n"; break;
        case "f": viseme_name = "phoneme_f_v"; break;
        case "k": viseme_name = "phoneme_k_g_ng"; break;
        case "i": viseme_name = "phoneme_ay"; break;
        case "r": viseme_name = "phoneme_r"; break;
        case "s": viseme_name = "phoneme_s_z"; break;
        case "u": viseme_name = "phoneme_ey_eh_uh"; break;
        case "&": viseme_name = "phoneme_aa"; break;
        case "@": viseme_name = "phoneme_aa"; break;
        case "a": viseme_name = "phoneme_ae_ax_ah"; break;
        case "e": viseme_name = "phoneme_ey_eh_uh"; break;
        case "EE": viseme_name = "phoneme_ao"; break;
        case "o": viseme_name = "phoneme_ao"; break;
        case "OO": viseme_name = "phoneme_aw"; break;
        default: viseme_name = ""; break;
    }

    realtimeRig.SetBlendshapeWeightsForFac(viseme_name, weight, true);
}
```
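The body of `GetVisemesForInterpolation` is not shown above. As a rough idea of what such a step could do, the sketch below cross-fades linearly between the viseme that started most recently and the one that starts next. This is an assumption about the technique, not the SDK's actual implementation, and `TimedViseme`, `WeightedViseme`, and `VisemeInterpolation` are hypothetical names. The timeline is assumed to be sorted by time.

```csharp
using System.Collections.Generic;

// Hypothetical types for illustration; the SDK uses its own InterpolationViseme.
public readonly struct TimedViseme
{
    public TimedViseme(float timeSeconds, string name) { TimeSeconds = timeSeconds; Name = name; }
    public float TimeSeconds { get; }
    public string Name { get; }
}

public readonly struct WeightedViseme
{
    public WeightedViseme(string name, float weight) { Name = name; Weight = weight; }
    public string Name { get; }
    public float Weight { get; }
}

public static class VisemeInterpolation
{
    // Returns the visemes active at `time`, with weights that sum to 1.
    // `timeline` must be sorted by TimeSeconds in ascending order.
    public static List<WeightedViseme> At(IReadOnlyList<TimedViseme> timeline, float time)
    {
        var result = new List<WeightedViseme>();
        if (timeline.Count == 0) return result;

        int last = timeline.Count - 1;

        // Outside the timeline: hold the first or last pose at full weight.
        if (time <= timeline[0].TimeSeconds)
        {
            result.Add(new WeightedViseme(timeline[0].Name, 1f));
            return result;
        }
        if (time >= timeline[last].TimeSeconds)
        {
            result.Add(new WeightedViseme(timeline[last].Name, 1f));
            return result;
        }

        // Find the pair of neighbouring marks that surrounds `time`.
        int i = 0;
        while (timeline[i + 1].TimeSeconds <= time) i++;

        // Linear cross-fade: the outgoing viseme fades out as the incoming one fades in.
        float span = timeline[i + 1].TimeSeconds - timeline[i].TimeSeconds;
        float t = (time - timeline[i].TimeSeconds) / span;
        result.Add(new WeightedViseme(timeline[i].Name, 1f - t));
        result.Add(new WeightedViseme(timeline[i + 1].Name, t));
        return result;
    }
}
```

A caller would pass the playback position plus any offset, mirroring the `time + visemeOffset` argument in `UpdatePoseForTimeImpl`, and then apply each returned weight through the rig.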

We include a fully working user interface in the Unity SDK. You can learn more by exploring the sample scene, which shows how to use our scripts to connect to the Didimo API, instantiate didimos, and play text-to-speech animations.

Go to Exploring the sample scenes

Last updated on 2020-10-06